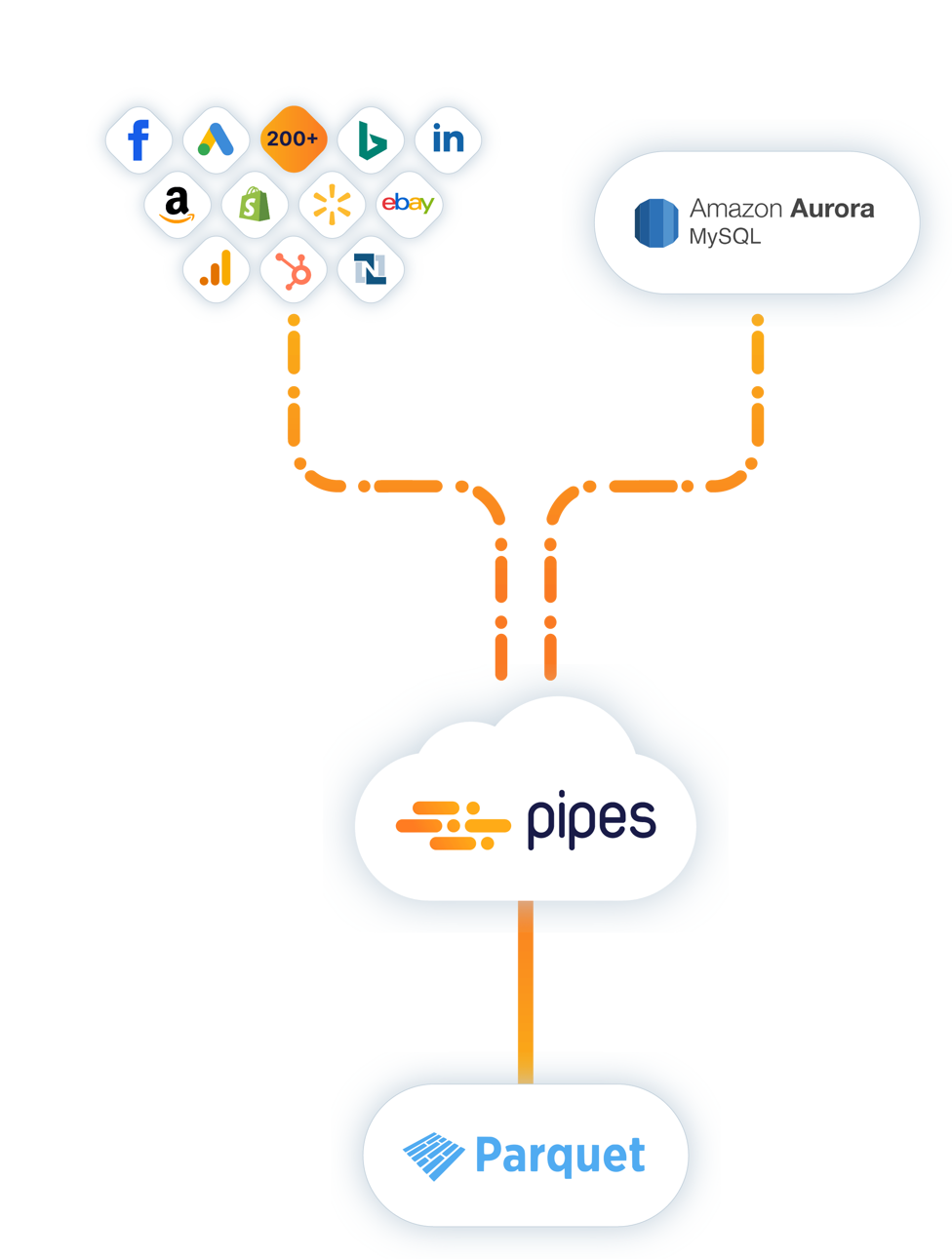

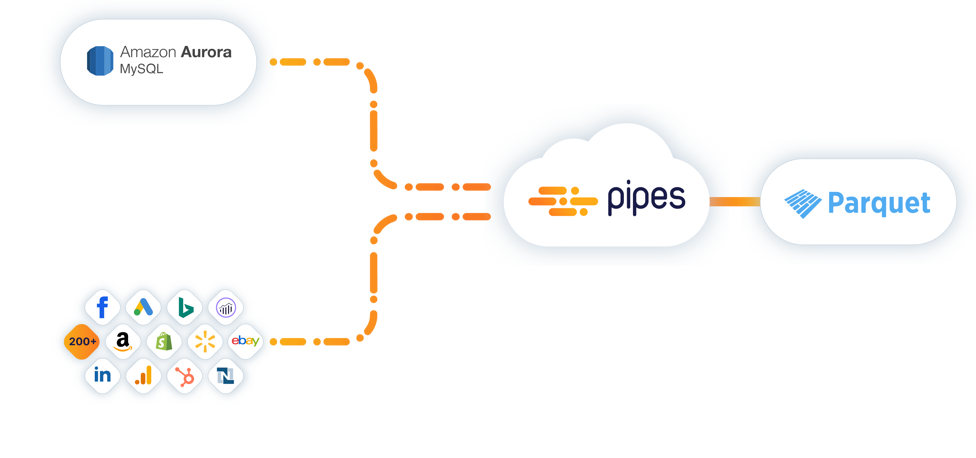

Load all your data from Amazon Aurora (MySQL Edition) to Parquet File to instantly get access to your Amazon Aurora (MySQL Edition) data. This data will always be updated on your defined schedule.

Use Cases

Explore how you can use Pipes in different scenarios.

Learn

Learn more about Pipes with customer stories, useful blog posts and the documentation.

Customer Case Study

Boosted Commerce automates all data integration processes with Pipes Professional.

Customer Case Study

SM Nutrition saves resources and costs through a lean inventory management.

Documentation

Read through the Pipes documentation here.

Blog

Find useful guides and further informative articles in our blog.

Customer Case Study: How Boosted Commerce automated all data processes

Customer Case Study: How Boosted Commerce automated all data processesLearn how Boosted Commerce quickly evolved into one of the world’s largest brand aggregators by automating all data integration processes with Pipes Professional